Abstract

While training robots to navigate autonomously is a growing area of research, collecting large and diverse datasets from real environments is expensive and time-consuming. We seek to alleviate this problem by training an autonomous agent inside a simulation built with Unreal Engine. We first create a dataset of over 10,000 images taken from within the simulation. Then, we use this data to train models with different architectures to determine which one performs best inside the simulation. Finally, we evaluate the model’s performance in a different simulation to determine whether the learning generalizes. We found that the model trained on our dataset could make it through a considerable portion of the mazes simulated in Unreal Engine, but failed to find similar success in other simulated environments.

Introduction

As technology improves, graphics processing units (GPUs) are increasingly used in computer graphics. One field that has benefited from GPU improvements is simulation. Physics simulations that require data parallelism and intensive computation are now more accessible than ever. One application of simulation is autonomous navigation. The high cost of building and maintaining a robot that can autonomously traverse terrain makes it challenging to implement computational intelligence on physical hardware. One way to test artificial intelligence in robots without that cost is through simulation. We are interested in examining neural network performance across different simulations and real life. In particular, we want to see how an agent can traverse a maze using a neural network (NN): we want to observe a robot learn to navigate terrain and identify a path to a goal.

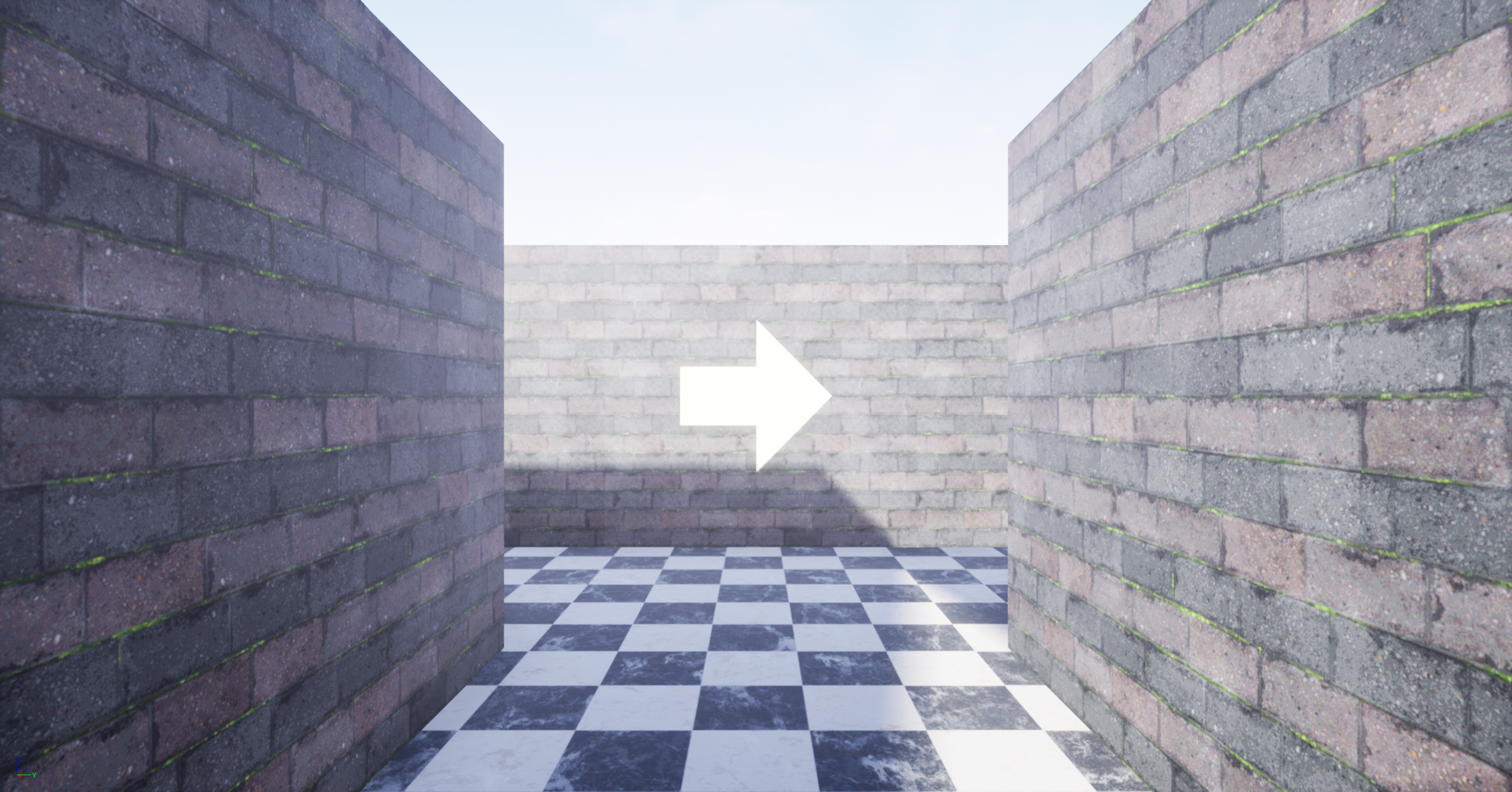

NNs are useful because we can feed images to a model and have it return a direction that a robot can interpret and act on. For this cross-simulation experiment, we have selected a handful of architectures that were trained in a Raycast simulation. We believe they will cross the reality gap best in the Unreal simulation. Such architectures include ResNet, AlexNet, and an original architecture that takes an image as input and concatenates the previous command string. The Unreal simulation serves as a proxy for how well the models might perform in real life. First, we train models in the Unreal simulation and see how they perform in the environment they were trained in. Then, we train models in the Unreal simulation and observe their performance in the raycasting simulation.
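To make the idea of combining an image with the previous command more concrete, here is a minimal sketch in plain Python. It is not our actual architecture. The command set in `COMMANDS`, the eight-element `image_features` vector (standing in for the output of a convolutional backbone), and the random, untrained weights are all assumptions chosen for illustration.

```python
import random

# Hypothetical command set; the real set of movement commands may differ.
COMMANDS = ["left", "forward", "right"]


def one_hot(command):
    """Encode a command string as a one-hot vector over COMMANDS."""
    return [1.0 if c == command else 0.0 for c in COMMANDS]


def build_input(image_features, previous_command):
    """Concatenate image features with the encoded previous command."""
    return list(image_features) + one_hot(previous_command)


def predict_direction(weights, biases, model_input):
    """Score each command with a single linear layer and return the best one."""
    scores = [
        sum(w * x for w, x in zip(row, model_input)) + b
        for row, b in zip(weights, biases)
    ]
    return COMMANDS[scores.index(max(scores))]


rng = random.Random(0)

# Assumed stand-in for features extracted from one camera image.
image_features = [rng.random() for _ in range(8)]

# Untrained, random weights: one row of weights per output command.
n_inputs = len(image_features) + len(COMMANDS)
weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in COMMANDS]
biases = [0.0] * len(COMMANDS)

model_input = build_input(image_features, "forward")
direction = predict_direction(weights, biases, model_input)
print(len(model_input), direction)
```

In a trained model the weights would be learned from labeled images, and the predicted direction at one step would be fed back in as the previous command at the next step.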

Read our full paper here!